7 The 4th Industrial Revolution

Engineering Ethics in the Machine Age

Key Themes & Ideas

- The Fourth Industrial Revolution, characterized by increasing interconnectivity and smart automation, is likely to drastically alter our social landscape

- The use of “Big Data”, machine learning, and algorithmic processing presents important social opportunities but also threatens grave personal and social harms

- The ethical use of Artificial Intelligence can help us solve previously intractable human and social problems

- Artificial Intelligence may create ethical concern from misuse, under-use, and over-use

- The Principles of Engineering Ethics, suitably refined and supplemented by a Principle of Explicability, can provide a valuable framework for ethical AI development and implementation

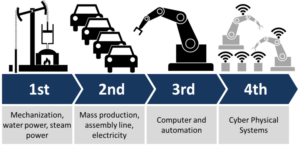

We are in the midst of the Fourth Industrial Revolution. Taking off where the Digital Revolution – the Third Industrial Revolution – ended, this new Machine Age is characterized by increasing interconnectivity and smart automation. This fourth industrial revolution is made possible by advancements in data science, artificial intelligence, and robotics which are beginning to blur the lines between the physical, digital, and biological worlds. According to many, the Fourth Industrial Revolution is especially characterized by an augmented social reality where nearly all of our interactions in the world are mediated by some form of digital technology.

This new revolution offers great opportunity but also threatens great peril. Some of what it offers is not new: the looming threat of job loss due to automation has been with us since the First Industrial Revolution. But it does intensify this and other old threats, for the greater power of these new technologies makes the potential harms more probable. When even creative jobs can be done by machines, what is left for human beings? Still other opportunities and risks are new, driven by wholly new possibilities for knowledge and interaction.

If we are to drive the Fourth Industrial Revolution, rather than simply being caught in its wake, it will be essential that we have a grip on what is possible and what is at stake. In this chapter, then, we examine two of the major drivers of this revolution: Big Data and Artificial Intelligence. As we will see, these drivers overlap as a great deal of what matters about Big Data is the result of at least basic forms of Artificial Intelligence: machine learning, algorithmic processing, etc.

1. Big Data & Algorithmic Processing

In contrast to (for instance) the engineering of bridges and airplanes, data practice has a much broader ethical sweep: it has the potential to significantly impact all of the fundamental interests of human beings. While unethical choices in bridge or airplane design may result in the loss of life or health, unethical choices in data practice can do this and more: they can ruin reputations, wipe out savings, or rob someone of their liberty. Conversely, of course, this also means that data practice holds out the hope of benefitting individuals and society in many more ways as well. What this all suggests is that the ethical landscape for data practitioners, software engineers, and others who work with data is even more complex than that faced by other types of technology professionals.

To start to get a feel for the complexities of data practice, it will be useful to consider some of the major potential benefits and risks of data. We’ll begin by examining the promise of a data-fueled world.[1]

1.1. The Promise of Big Data

The promise of big data can be grouped into three main types of benefits: (1) Improving our understanding of ourselves and the world; (2) improving social, institutional, and economic efficiency; and (3) predictive accuracy and personalization.

1.1.1. Human Understanding

Data and its associated practices can uncover previously unrecognized correlations and patterns in the world. In so doing, data can greatly enrich our understanding of ethically significant relationships—in nature, society, and our personal lives. Understanding the world is good in itself, but also, the more we understand about the world and how it works, the more intelligently we can act in it. Data can help us to better understand how complex systems interact at a variety of scales: from large systems such as weather, climate, markets, transportation, and communication networks, to smaller systems such as those of the human body, a particular ecological niche, or a specific political community, down to the systems that govern matter and energy at subatomic levels. Data practice can also shed new light on previously unseen or unattended harms, needs, and risks. For example, big data practices can reveal that a minority or marginalized group is being harmed by a drug or an educational technique that was originally designed for and tested only on a majority/dominant group, allowing us to innovate in safer and more effective ways that bring more benefit to a wider range of people.

1.1.2. Social, Institutional, and Economic Efficiency

Once we have a more accurate picture of how the world works, we can design or intervene in its systems to improve their functioning. This reduces wasted effort and resources and improves the alignment between a social system or institution’s policies/processes and our goals. For example, big data can help us create better models of systems such as regional traffic flows, and with such models we can more easily identify the specific changes that are most likely to ease traffic congestion and reduce pollution and fuel use, ethically significant gains that can improve our happiness and the environment. Data used to better model voting behavior in a given community could allow us to identify the distribution of polling station locations and hours that would best encourage voter turnout, promoting ethically significant values such as citizen engagement. Data analytics can search for complex patterns indicating fraud or abuse of social systems. The potential efficiencies of big data go well beyond these examples, enabling social action that streamlines access to a wide range of ethically significant goods such as health, happiness, safety, security, education, and justice.

1.1.3. Predictive Accuracy and Personalization

Not only can good data practices help to make social systems work more efficiently, as we saw above, but they can also be used to more precisely tailor actions to be effective in achieving good outcomes for specific individuals, groups, and circumstances, and to be more responsive to user input in (approximately) real time. Of course, perhaps the most well-known examples of this advantage of data involve personalized search and the serving of advertisements. Designers of search engines, online advertising platforms, and related tools want the content they deliver to you to be the most relevant to you, now. Data analytics allow them to predict your interests and needs with greater accuracy. But it is important to recognize that the predictive potential of data goes well beyond this familiar use, enabling personalized and targeted interactions that can deliver many kinds of ethically significant goods. From targeted disease therapies in medicine that are tailored specifically to a patient’s genetic fingerprint, to customized homework assignments that build upon an individual student’s existing skills and focus on practice in areas of weakness, to predictive policing strategies that send officers to the specific locations where crimes are most likely to occur, to timely predictions of mechanical failure or natural disaster, a key goal of data practice is to more accurately fit our actions to specific needs and circumstances, rather than relying on more sweeping and less reliable generalizations. In this way the choices we make in seeking the good life for ourselves and others can be more effective more often, and for more people.

1.2. The Perils of Big Data

Many of the major potential benefits of big data are quite obvious once we have a basic understanding of what is possible. The risks, however, can be more difficult to see. Nonetheless, we can loosely group them into 3 main categories: (1) Threats to privacy and security; (2) Threats to fairness and justice; and (3) Threats to transparency and autonomy.

1.2.1. Threats to Privacy & Security

Thanks to the ocean of personal data that humans are generating today (or, to use a better metaphor, the many different lakes, springs, and rivers of personal data that are pooling and flowing across the digital landscape), most of us do not realize how exposed our lives are, or can be, by common data practices.

Even anonymized datasets can, when linked or merged with other datasets, reveal intimate facts (or in many cases, falsehoods) about us. As a result of your multitude of data-generating activities (and of those you interact with), your sexual history and preferences, medical and mental health history, private conversations at work and at home, genetic makeup and predispositions, reading and Internet search habits, and political and religious views may all be part of data profiles that have been constructed and stored somewhere unknown to you, often without your knowledge or informed consent. Such profiles exist within a chaotic data ecosystem that gives individuals little to no ability to personally curate, delete, correct, or control the release of that information. Only thin, regionally inconsistent, and weakly enforced sets of data regulations and policies protect us from the reputational, economic, and emotional harms that release of such intimate data into the wrong hands could cause. In some cases, as with data identifying victims of domestic violence, or political protestors or sexual minorities living under oppressive regimes, the potential harms can even be fatal.
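To see how linking can undo anonymization, consider the following minimal sketch. It joins two tiny, entirely made-up tables on attributes they share (ZIP code, birth date, and sex). Every record, every name, and the `link_records` helper are hypothetical and exist only for illustration; real linkage techniques are usually fuzzier and more powerful than this exact-match version.

```python
# Hypothetical, made-up records for illustration only.
# An "anonymized" health dataset: names removed, other attributes kept.
health_records = [
    {"zip": "02138", "birth_date": "1961-07-15", "sex": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_date": "1975-03-02", "sex": "M", "diagnosis": "diabetes"},
    {"zip": "02138", "birth_date": "1988-11-30", "sex": "F", "diagnosis": "depression"},
]

# A separate dataset that includes names (for example, a public roll).
public_records = [
    {"name": "Alice Example", "zip": "02138", "birth_date": "1961-07-15", "sex": "F"},
    {"name": "Bob Example", "zip": "02139", "birth_date": "1975-03-02", "sex": "M"},
    {"name": "Carol Example", "zip": "02140", "birth_date": "1990-01-01", "sex": "F"},
]

# Attributes that are harmless alone but identifying in combination.
QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")


def link_records(anonymized, public, keys=QUASI_IDENTIFIERS):
    """Re-attach names to 'anonymized' rows whose shared attributes
    match exactly one person in the public dataset."""
    index = {}
    for person in public:
        key = tuple(person[k] for k in keys)
        index.setdefault(key, []).append(person["name"])

    linked = []
    for row in anonymized:
        names = index.get(tuple(row[k] for k in keys), [])
        if len(names) == 1:  # a unique match singles out one person
            linked.append({"name": names[0], "diagnosis": row["diagnosis"]})
    return linked


reidentified = link_records(health_records, public_records)
for match in reidentified:
    print(match["name"], "->", match["diagnosis"])
```

Neither table is revealing on its own: one has diagnoses but no names, the other has names but no diagnoses. The harm arises only when they are combined, which is why removing names from a dataset is not, by itself, an adequate privacy safeguard.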

And of course, this level of exposure does not just affect you but virtually everyone in a networked society. Even those who choose to live ‘off the digital grid’ cannot prevent intimate data about them from being generated and shared by their friends, family, employers, clients, and service providers. Moreover, much of this data does not stay confined to the digital context in which it was originally shared. For example, information about an online purchase you made in college of a politically controversial novel might, without your knowledge, be sold to third-parties (and then sold again), or hacked from an insecure cloud storage system, and eventually included in a digital profile of you that years later a prospective employer or investigative journalist could purchase. Should you, and others, be able to protect your employability or reputation from being irreparably harmed by such data flows? Data privacy isn’t just about our online activities, either. Facial, gait, and voice-recognition algorithms, as well as geocoded mobile data, can now identify and gather information about us as we move and act in many public and private spaces.

Unethical or ethically negligent data privacy practices, from poor data security and data hygiene, to unjustifiably intrusive data collection and data mining, to reckless selling of user data to third parties, can expose others to profound and unnecessary harms.

1.2.2. Threats to Fairness & Justice

We all have a significant life interest in being judged and treated fairly, whether it involves how we are treated by law enforcement and the criminal and civil court systems, how we are evaluated by our employers and teachers, the quality of health care and other services we receive, or how financial institutions and insurers treat us.

All of these systems are being radically transformed by new data practices and analytics, and the preliminary evidence suggests that the values of fairness and justice are too often endangered by poor design and use of such practices. The most common causes of such harms are arbitrariness, avoidable errors and inaccuracies, and unjust and often hidden biases in datasets and data practices.

For example, investigative journalists have found compelling evidence of hidden racial bias in data-driven predictive algorithms used by parole judges to assess convicts’ risk of reoffending.[2] Of course, bias is not always harmful, unfair, or unjust. A bias against, for example, convicted bank robbers when reviewing job applications for an armored-car driver is entirely reasonable! But biases that rest on falsehoods, sampling errors, and unjustifiable discriminatory practices are all too common in data practice.

Typically, such biases are not explicit, but implicit in the data or data practice, and thus harder to see. For example, in the case involving racial bias in criminal risk-predictive algorithms cited above, the race of the offender was not in fact a label or coded variable in the system used to assign the risk score. The racial bias in the outcomes was not intentionally placed there, but rather ‘absorbed’ from the racially-biased data the system was trained on. We use the term proxies to describe how data that are not explicitly labeled by race, gender, location, age, etc. can still function as indirect but powerful indicators of those properties, especially when combined with other pieces of data. A very simple example is the function of a zip code as a strong proxy, in many neighborhoods, for race or income. So, a risk-predicting algorithm could generate a racially-biased prediction about you even if it is never ‘told’ your race. This makes the bias no less harmful or unjust; a criminal risk algorithm that inflates the actual risk presented by black defendants relative to otherwise similar white defendants leads to judicial decisions that are wrong, both factually and morally, and profoundly harmful to those who are misclassified as high-risk. If anything, implicit data bias is more dangerous and harmful than explicit bias, since it can be more challenging to expose and purge from the dataset or data practice.
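The way a proxy carries bias can be sketched in a few lines. The example below is a deliberately simplified illustration, not a description of any real risk-assessment system: the records are invented, the "model" is just a per-ZIP-code average, and the names `train_zip_model` and `mean_score_by_group` are hypothetical. The group label is never given to the model, yet the scores it produces still differ by group.

```python
from collections import defaultdict

# Hypothetical training records: (zip_code, group, flagged_in_past).
# 'group' stands in for a protected attribute and is NOT a model input.
history = [
    ("11111", "A", 1), ("11111", "A", 1), ("11111", "A", 0), ("11111", "B", 1),
    ("22222", "B", 0), ("22222", "B", 0), ("22222", "B", 1), ("22222", "A", 0),
]


def train_zip_model(records):
    """'Learn' a risk score per ZIP code: the share of past records flagged."""
    totals, flagged = defaultdict(int), defaultdict(int)
    for zip_code, _group, label in records:  # the group is deliberately ignored
        totals[zip_code] += 1
        flagged[zip_code] += label
    return {z: flagged[z] / totals[z] for z in totals}


def mean_score_by_group(records, model):
    """Audit step: average the model's score within each group."""
    sums, counts = defaultdict(float), defaultdict(int)
    for zip_code, group, _label in records:
        sums[group] += model[zip_code]
        counts[group] += 1
    return {g: sums[g] / counts[g] for g in sums}


model = train_zip_model(history)
audit = mean_score_by_group(history, model)
print("score per ZIP code:", model)
print("mean score per group:", audit)
```

Because most members of group A in this invented data live in the ZIP code with more past flags, the model assigns them a higher average score without ever seeing the group label. Note that the audit step needs the group label even though the model does not: detecting proxy bias usually requires collecting exactly the attribute one was trying to leave out.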

In other data practices the harms are driven not by bias, but by poor quality, mislabeled, or error-riddled data (i.e., ‘garbage in, garbage out’); inadequate design and testing of data analytics; or a lack of careful training and auditing to ensure the correct implementation and use of the data system. For example, such flawed data practices by a state Medicaid agency in Idaho led it to make large, arbitrary, and very possibly unconstitutional cuts in disability benefit payments to over 4,000 of its most vulnerable citizens.[3] In Michigan, flawed data practices led another agency to levy false fraud accusations and heavy fines against at least 44,000 of its innocent, unemployed citizens for two years. It was later learned that its data-driven decision-support system had been operating at a shockingly high false-positive error rate of 93 percent.[4]
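Error figures like the one above are easier to interpret once we see how such measures are computed. The sketch below assumes that every case has been manually reviewed and sorted into four counts; the function name `audit_flags` and all of the numbers are hypothetical and are not the Michigan agency's actual data. It reports two measures that are easy to confuse: the share of accusations that were wrong, and the share of innocent people who were accused.

```python
def audit_flags(true_pos, false_pos, true_neg, false_neg):
    """Summarize how often a fraud-flagging system is wrong.

    All four arguments are counts of cases after manual review.
    """
    flagged = true_pos + false_pos    # everyone the system accused
    innocent = false_pos + true_neg   # everyone who did nothing wrong
    guilty = true_pos + false_neg     # everyone who actually committed fraud
    return {
        "share_of_flags_wrong": false_pos / flagged,
        "false_positive_rate": false_pos / innocent,
        "share_of_fraud_missed": false_neg / guilty,
    }


# Hypothetical counts chosen for illustration only.
report = audit_flags(true_pos=70, false_pos=930, true_neg=8970, false_neg=30)
for name, value in report.items():
    print(f"{name}: {value:.1%}")
```

With these invented counts, 93 percent of accusations are wrong even though fewer than 10 percent of innocent people are accused. When reading a reported error rate, it is therefore worth checking which denominator is being used, and an ethical audit would track more than one of these measures.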

While not all such cases will involve datasets on the scale typically associated with ‘big data’, they all involve ethically negligent failures to adequately design, implement and audit data practices to promote fair and just results. Such failures of ethical data practice, whether in the use of small datasets or the power of ‘big data’ analytics, can and do result in economic devastation, psychological, reputational, and health damage, and for some victims, even the loss of their physical freedom.

1.2.3. Threats to Transparency and Autonomy

Transparency is an important procedural value that emphasizes the importance of being able to see how a given social system or institution works, as well as being able to inquire about the basis of life-affecting decisions made within that system or institution. So, for example, if your bank denies your application for a home loan, transparency will be served by you having access to information about exactly why you were denied the loan, and by whom.
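One way to picture what a transparent decision looks like is a decision procedure that records its reasons as it goes. The following is a minimal sketch under stated assumptions: the thresholds (a minimum credit score of 650 and a maximum debt-to-income ratio of 40%) are invented, and `LoanDecision` and `review_application` are hypothetical names, not any bank's actual rules.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LoanDecision:
    approved: bool
    reasons: List[str]  # plain-language grounds for a denial
    decided_by: str     # who or what is accountable for the decision


def review_application(income: float, debt: float, credit_score: int) -> LoanDecision:
    """Apply hypothetical rules and record every rule that failed."""
    reasons = []
    if credit_score < 650:
        reasons.append(f"credit score {credit_score} is below the minimum of 650")
    if income <= 0 or debt / income > 0.4:
        reasons.append("debt-to-income ratio exceeds 40%")
    return LoanDecision(
        approved=not reasons,
        reasons=reasons,
        decided_by="rule set v1 (hypothetical)",
    )


decision = review_application(income=50_000, debt=25_000, credit_score=700)
print(decision.approved)
for reason in decision.reasons:
    print("-", reason)
```

An applicant who receives this record knows both why the loan was denied and what produced the decision, which is the information needed to appeal or to change course before applying again.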

Transparency is importantly related to autonomy – the ability to govern the course of one’s own life. To be effective at steering the course of my own life (to be autonomous), I must have a certain amount of accurate information about the other forces acting upon me in my social environment (that is, I need some transparency in the workings of my society). Consider the example given above: if I know why I was denied the loan, I can figure out what I need to change to be successful in a new application, or in an application from another bank. The fate of my aspiration to home ownership remains at least somewhat in my control. But if I have no information to go on, then I am blind to the social forces blocking my aspiration, and have no clear way to navigate around them. Data practices have the potential to create or diminish social transparency, but diminished transparency is currently the greater risk because of two factors.

The first risk factor has to do with the sheer volume and complexity of today’s data, and of the algorithmic techniques driving big data practices. For example, machine learning algorithms trained on large datasets can be used to make new assessments based on fresh data; that is why they are so useful. The problem is that, especially with ‘deep learning’ algorithms, it can be difficult or impossible to reconstruct the machine’s ‘reasoning’ behind any particular judgment.[5] This means that if my loan was denied on the basis of such an algorithm, the loan officer and even the system’s programmers might be unable to tell me why, even if they wanted to. And it is unclear how I would appeal such an opaque machine judgment, since I lack the information needed to challenge its basis. In this way my autonomy is restricted. Because of the lack of transparency, my choices in responding to a life-affecting social judgment about me have been severely limited.

The second risk factor is that often, data practices are cloaked behind trade secrets and proprietary technology, including proprietary software. While laws protecting intellectual property are necessary, they can also impede social transparency when the protected property (the technique or invention) is a key part of the mechanisms of social functioning. These competing interests in intellectual property rights and social transparency need to be appropriately balanced. In some cases the courts will decide, as they did in the aforementioned Idaho case. In that case, K.W. v. Armstrong, a federal court ruled that citizens’ due process was violated when, upon requesting the reason for the cuts to their disability benefits, the citizens were told that trade secrets prevented releasing that information. Among the remedies ordered by the court was a testing regime to ensure the reliability and accuracy of the automated decision-support systems used by the state.

However, not every obstacle to data transparency can or should be litigated in the courts. Securing an ethically appropriate measure of social transparency in data practices will require considerable public discussion and negotiation, as well as good faith efforts by data practitioners to respect the ethically significant interest in transparency.

2. Ethics & Artificial Intelligence

Artificial Intelligence (AI) has the power to drastically reshape human existence. Indeed, the discussion of big data and algorithmic processing in the previous sections shows how some lesser forms of AI already have reshaped our lives. But AI is more than machine learning and algorithms (although that is part of it). Given the increasing involvement of AI in our lives, it is more essential than ever that we have a framework for understanding how to develop AI for the social good. And this is precisely the task that the AI4People initiative set for itself: to understand the core opportunities and risks associated with AI and to recommend an ethical framework that should undergird the development and adoption of AI technologies.[6]

In what follows, we will summarize and synthesize some of the key findings of the AI4People initiative and its foundations for a “Good AI Society”.

2.1. Risks & Opportunities of AI

Starting from the position that AI will (and already does) have a major impact on society, the focus for ethical investigation is on what sort of impact(s) it will have. Rather than asking whether AI will have an impact, we ask who will be impacted, how they will be impacted, where we will see the impacts, and when we will see them.

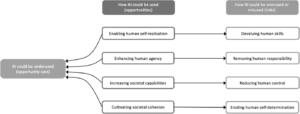

To investigate those questions in a more useful way, AI4People identified four broad ways AI may improve or threaten key aspects of society and human existence. They explain their approach thusly:

In line with this analysis, they offer us the following graphical representation of the related risks and opportunities. On the back of this graphical representation, we will briefly explore each of the key ideas.

2.1.1. Self-realization without devaluing human abilities

AI may enable self-realization: the ability for people to flourish in terms of their own characteristics, interests, potential abilities or skills, aspirations and life projects. Indeed, many technological innovations have done this. The creation of laundry machines liberated people – particularly women – from the drudgery of domestic work. And so, similarly, various forms of “smart” automation present us with the opportunity to free up time for cultural, intellectual and social pursuits.

The fact that some skills will be made obsolete while other skills will emerge (or emerge as newly valuable) should not be a concern in itself, for that is simply a fact of life. However, this sort of change can be concerning for two reasons: the pace at which it happens and the unequal distribution of benefits and burdens that results. If old skills are quickly devalued or rendered obsolete then we are likely to see significant disruptions of the job market and the nature of employment. For individuals, this could be troublesome as work is often intimately linked to personal identity, self-esteem, and social role or standing. So even ignoring the potential economic harm (which could be accounted for through policy), such disruption raises issues. From a societal perspective, as AI begins to replace human expertise in sensitive, skill-intensive domains such as health care diagnosis or aviation, there is a risk of vulnerability in the event of AI malfunction or an adversarial attack.

In short, change will occur but the aim is for the change to be as fair as possible.

2.1.2. Enhancing human agency without removing human responsibility

AI provides an ever growing reservoir of “smart agency”. In augmenting human intelligence, it will make it possible for us to do more, do it better, and do it faster. However, in giving decision-making over to AI, we risk a black hole of responsibility. One major concern with AI development is the “black box” mentality that sees AI decision-making as beyond human understanding and control. Thus, it is important we think clearly about how much and what sorts of agency we delegate to AI.

Helpfully, the relationship between the degree and quality of agency that people enjoy and how much agency we delegate to autonomous systems is not zero-sum. If developed thoughtfully, AI offers the opportunity of improving and multiplying the possibilities for human agency. Human agency may be ultimately supported, refined, and expanded by the embedding of facilitating frameworks, designed to improve the likelihood of morally good outcomes, in the set of functions that we delegate to AI systems.

2.1.3. Increasing societal capabilities without reducing human control

AI offers the opportunity to prevent and cure diseases, optimize transportation and logistics, and much more. AI presents countless possibilities for reinventing society by radically enhancing what humans are collectively capable of. More AI may support better coordination, and hence more ambitious goals. Augmenting human intelligence with AI could find new solutions to old and new problems, including a fairer or more efficient distribution of resources and a more sustainable approach to consumption.

Precisely because such technologies have the potential to be so powerful and disruptive, they also introduce proportionate risks. If we rely on AI to augment our abilities in the wrong way, we may delegate important tasks and decisions to autonomous systems that should remain at least partly subject to human supervision and choice. This may result in us losing the ability to monitor the performance of these systems or to prevent or redress errors or harms that arise.

2.1.4. Cultivating societal cohesion without eroding human self-determination

Many of the world’s most difficult problems – climate change, antimicrobial resistance, and nuclear proliferation – are difficult precisely because they exhibit high degrees of coordination complexity: they can only be tackled successfully if all stakeholders co-design and co-own the solutions and cooperate to bring them about. A data-intensive, algorithmic-driven approach using AI could help deal with such coordination complexity and thereby support greater cohesion and collaboration. For instance, AI could be used to support global emissions cuts as a means of combatting climate change, perhaps as a means of “self-nudging” whereby the system is set up to monitor and react to changes in emissions without human input, thereby eliminating (or at least reducing) the common problem we face where nations agree to cuts but never carry out the necessary tasks.

Of course, such use of AI systems may threaten human self-determination as well. They could lead to unplanned or unwelcome changes in human behaviors. More generally, we may feel controlled by the AI if we allow it to be used for “nudging” in a variety of areas of our lives.

2.2. Principles of Ethical AI

In light of these opportunities and risks, the AI4People group proposes an ethical framework based on the bioethical principles – the same principles we borrowed for thinking about experimental technology. But they also added a new principle – explicability – which becomes particularly important in the context of AI.

The relevance of the Principle of Welfare to AI should be clear from the earlier discussion. AI should be used to improve human and environmental well-being, and it certainly has that potential. But of course, in the process of using AI to improve the public welfare we must be on guard against the variety of harms AI can cause. This includes the harms resulting both from overuse, which may often be accidental, and from misuse, which is likely deliberate.

The Principle of Autonomy takes on a new life in the context of AI, as consideration of the opportunities and risks should evidence. Because adopting AI and its smart agency can involve willingly giving up some of our decision-making power to machines, it raises the specter of autonomously giving up our autonomy. As such, in the AI context, the principle of autonomy should focus on striking a balance between the decision-making power we retain for ourselves and that which we delegate to artificial agents.

The group thus suggests that what is most important in the context of AI is “meta-autonomy”, or a decide-to-delegate model. They suggest that “humans should always retain the power to decide which decisions to take, exercising the freedom to choose where necessary, and ceding it in cases where overriding reasons, such as efficacy, may outweigh the loss of control over decision-making.” And any time a decision to delegate has been made, it should be reversible.
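The decide-to-delegate idea can be pictured as a small piece of software design. The sketch below is only an illustration of the two properties just described: a person chooses which kinds of decision to hand over, and any such choice can be reversed. The class `DelegationRegistry`, its methods, and the example decision types are all hypothetical and are not drawn from the AI4People report.

```python
from typing import Callable, Dict

Decider = Callable[[dict], str]


class DelegationRegistry:
    """Tracks which kinds of decision a person has chosen to delegate."""

    def __init__(self, human: Decider) -> None:
        self._human = human                      # the default decision-maker
        self._delegates: Dict[str, Decider] = {}

    def delegate(self, decision_type: str, agent: Decider) -> None:
        """The human hands one kind of decision to an artificial agent."""
        self._delegates[decision_type] = agent

    def revoke(self, decision_type: str) -> None:
        """Reverse a delegation; control returns to the human."""
        self._delegates.pop(decision_type, None)

    def decide(self, decision_type: str, case: dict) -> str:
        """Route the case to the delegate if one exists, else to the human."""
        agent = self._delegates.get(decision_type, self._human)
        return agent(case)


def ask_human(case: dict) -> str:
    return f"human decided case {case['id']}"


def route_planner(case: dict) -> str:
    return f"AI decided case {case['id']}"


registry = DelegationRegistry(human=ask_human)
before = registry.decide("route", {"id": 1})
registry.delegate("route", route_planner)
during = registry.decide("route", {"id": 2})
registry.revoke("route")
after = registry.decide("route", {"id": 3})
print(before, during, after, sep="\n")
```

The design choice worth noticing is the default: any decision type that has not been explicitly delegated stays with the human, so delegation is always an act the person performs rather than something that happens to them.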

The Principle of Fairness applies to AI in at least three key ways. First, it is suggested that AI should be used to correct past wrongs such as by eliminating unfair discrimination. We know that human beings have biases and that we are often unaware of our biases and how they influence our decision-making. At least in principle, a machine could be immune to the sort of implicit biases that plague human psychology. Second, fairness demands that the benefits and burdens of the use of AI are fairly distributed. And third, we must be on guard against AI undermining important social institutions and structures. For example, the rising use of AI and algorithms in healthcare could be seen, if carried out improperly, as a threat to our trust in the healthcare system.

Finally, the researchers introduce a new Principle of Explicability. This principle suggests that the creation and use of AI must be intelligible – it should be possible for humans to understand how it works – and must promote accountability – it must be possible for us to determine who is responsible for the way it works. On the group’s view, this principle enables all the others: To adjudicate whether AI is promoting the social good and not causing harm, we must be able to understand what it is doing and why. To truly retain autonomous control even as we delegate tasks, we must know how the AI will carry out those tasks. And, finally, to ensure fairness, it must be possible to hold people accountable if the AI produces negative outcomes, if for no other reason than to figure out who is responsible for fixing the problem.

Check Your Understanding

After successfully completing this chapter, you should be able to answer all the following questions:

- What is an augmented social reality and how does it relate to the Fourth Industrial Revolution?

- What are the main types of benefits and risks associated with Big Data?

- In the context of data science, what is a proxy? What are its ethical implications?

- What is the value of Transparency concerned with? How does it relate to The Principle of Explicability?

- What are some of the major risks and opportunities associated with Artificial Intelligence?

- What does human self-realization involve? How might AI facilitate it?

- In the context of AI, what are Facilitating Frameworks?

- What does it mean for a social problem to exhibit a high degree of Coordination Complexity? How might AI help deal with such a problem?

- What is a Decide-to-Delegate Model? How does it relate to autonomy?

[1] The following discussion borrows heavily from Shannon Vallor’s “An Introduction to Data Ethics” teaching module. The original can be found at https://www.scu.edu/ethics/focus-areas/technology-ethics/resources/an-introduction-to-data-ethics/

[2] Angwin, et al. (2016). “Machine Bias,” ProPublica. https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

[3] Jay Stanley (2017). “Pitfalls of Artificial Intelligence Decisionmaking Highlighted in Idaho ACLU Case,” American Civil Liberties Union. https://www.aclu.org/blog/privacy-technology/pitfalls-artificial-intelligence-decisionmaking-highlighted-idaho-aclu-case

[4] Paul Egan (2017). “Data glitch was apparent factor in false fraud charges against jobless claimants,” Detroit Free Press.

[5] Will Knight (2017). “The Dark Secret at the Heart of AI,” MIT Technology Review. https://www.technologyreview.com/s/604087/the-dark-secret-at-the-heart-of-ai/

[6] Luciano Floridi, et al. (2018). “AI4People – An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations,” Minds and Machines.