3 Technology in Society

Key Themes & Ideas

- Engineering and technology function in a social context

- Technology is best understood as a techno-social system

- Technology mediates our experience of and interaction with the world

- The Control Dilemma shows us that our ability to control the effects of technology is inversely related to our ability to anticipate those effects

- In mediating our world, technology can also change what we value or how we understand our values and principles

- Technology can intentionally and implicitly mediate perceptions and actions

- Technological mediations can take many forms, but the three most common are guidance, persuasion, and coercion

As it departed on its maiden voyage in April 1912, the Titanic was proclaimed the greatest engineering achievement ever.[1] Not merely was it the largest ship the world had seen, having a length of almost three football fields; it was also the most glamorous of ocean liners, complete with a tropical vinegarden restaurant and the first seagoing masseuse. It was touted as the first fully safe ship. Since the worst collision envisaged was at the juncture of two of its sixteen watertight compartments, and since it could float with any four compartments flooded, the Titanic was believed to be virtually unsinkable.

Buoyed by such confidence, the captain allowed the ship to sail full speed at night in an area frequented by icebergs, one of which tore a large gap in the ship’s side, flooding five compartments. Time remained to evacuate the ship, but there were not enough lifeboats to accommodate all the passengers and crew. British regulations then in effect did not foresee vessels of this size. Accordingly, only 825 places were required in lifeboats, sufficient for a mere one-quarter of the Titanic’s capacity of 3547 passengers and crew. No extra precautions had seemed necessary for an unsinkable ship. The result: 1522 dead (drowned or frozen) out of the 2227 on board for the Titanic’s first trip.[2]

The Titanic remains a haunting image of technological complacency. So many products of technology present some potential dangers that engineering should be regarded as an inherently risky activity. In order to underscore this fact and help to explore its ethical implications, we suggest that engineering should be viewed as an experimental process. It is not, of course, an experiment conducted solely in a laboratory under controlled conditions. Rather, it is an experiment on a social scale involving human subjects.

There are conjectures that the Titanic left England with a coal fire on board, that this made the captain rush the ship to New York, and that water entering the coal bunkers through the gash caused an explosion and greater damage to the compartments. Others maintain that embrittlement of the ship’s steel hull in the icy waters caused a much larger crack than a collision would otherwise have produced. Shipbuilders have argued that carrying the watertight bulkheads up higher on such a big ship instead of allowing less obstructed space on the passenger decks for arranging cabins would have kept the ship afloat. However, what matters most is that the lack of lifeboats and the difficulty of launching those available from the listing ship prevented a safe exit for two-thirds of the persons on board, where a safe exit is a mechanism or procedure for escape from harm in the event a product fails.

1. The Social Context of Engineering & Technology

Engineering does not occur in a vacuum and technology is neither created nor maintained in a vacuum. Every individual engineer and every engineering firm or company is embedded in society. As such, both engineers as people and engineering as a profession are influenced by their society while also having the power to influence that society going forward. Consider, for instance, the lack of lifeboats on the Titanic. The engineers who signed off on the designs with such limited lifeboats did so, in part, because that is all society required of them via its regulations.

Not only do the law and official regulations influence engineering decisions, but so does public perception. Consider the idea of ‘efficiency’, a common term in engineering and technology. It is generally agreed that it is better to make an activity more efficient and, within the technical sciences, ‘efficiency’ is often understood in a purely quantitative way: it is the ratio of useful energy output to energy input. However, there are a variety of ‘efficient’ practices we could adopt (or previously did adopt) which are nevertheless excluded from consideration: child labor was in many ways more efficient than adult labor, and yet no one currently builds machinery that relies on a small child being able to fit into certain crevices.

Other examples abound. For instance, Trevor Pinch and Wiebe Bijker showed that social forces directed the development path of bicycles in their early history.[3] Early on, there were two types of bicycles: a sporting bicycle with a high front wheel that made it fast but unstable and a more “utilitarian” design with a smaller front wheel to promote stability at the expense of speed. Although originally designed for different purposes – the sporting bicycle for athletes and the other for ordinary transportation – the sporting bicycle never really caught on and eventually disappeared. Society, rather than the designers, determined that the sporting bicycle was unnecessary.

In the other direction, we can see the way technology has influenced and changed society, often in unexpected or unpredictable ways. There are obvious cases: speed bumps effectively force people to drive more slowly and walking paths and streetlamps encourage foot traffic to follow specified paths. But there are also less obvious cases: the invention of the printing press revolutionized European civilization in many ways, including being a major contributing factor in the Protestant Reformation, thus drastically changing the religious landscape of the entire world. More recent technologies like cell phones and social media have also heavily influenced social relationships by encouraging instantaneous and constant communication and altering what it means to call someone a “friend”.

And then there are the more general ways technology often influences society: changing what we consider possible, required, or impermissible. Before advances in medical technologies, we would simply expect someone to die from cancer but now because it is possible to treat many cancers we find it morally problematic when those treatments are not available to someone. Technology also changes what we can expect from our lives: what sorts of interactions are possible, where it is possible to live and work, what sorts of jobs even exist.

In short, all of these examples come together to show that engineering and technology function in a social context: technology simultaneously exerts influence over society and is influenced by society. This, then, implies that technology professionals both exert influence over society through their designs and, in turn, are influenced by various social factors in the creation and deployment of their designs.

Objects make us, in the very same process that we make them.[4]

Engineering’s influence on society is most evident in the context of disruptive technologies: technologies that significantly alter the way individuals, industries, businesses, or society at large operate. The internet and, later, smartphones are both prime examples of disruptive technologies that have substantially changed our social world. Looking ahead, we can predict that technologies like Generalized Artificial Intelligence, Virtual Reality, and perhaps Blockchain will all end up, if embraced by society, radically transforming the social world as well. But even less “innovative” technologies can be disruptive: autonomous vehicles are not that far removed from existing vehicles in many ways and yet we can imagine that a society which has embraced autonomous vehicles may look very different from our own. For instance, imagine the changes to people’s daily lives and physical and mental health if traffic jams and collisions were a thing of the past!

Examples of the Social Context of Technology

- Although human and non-human animal cloning is technically feasible, it is widely opposed by (nearly) every society and thus has barely progressed

- We have substantial power to genetically modify crops but genetic modification is heavily opposed in certain areas of the world, such as Europe and some areas of Africa, thus stifling development

- Many towns and cities in the United States are designed around the personal automobile, indicating the impact personal automobiles have had on American society

- More and more of our social interactions are now technologically mediated: we speak via phones, use video chat, text message, play online video games with text and voice chat, etc.

- A significant portion of contemporary occupations only exist because of various enabling technologies such as computers and automobiles

2. Techno-Social Systems

There is a deeper way in which technology functions in a social context. Our previous examples of technology affecting society and society affecting technology largely treated society and technology as distinct even if interactive. But that is not quite an accurate picture, for in reality technology is embedded in society and it only functions as part of a system composed of both human/social elements and technological elements. Whether a piece of technology does what it was designed to do is not merely a matter of its technical design, but also how it ties into relevant social structures.

To put this another way, if we really want to understand the function of any piece of technology, we cannot simply examine the technological artifact in isolation. There is no sense to be made of what a smartphone does without reference to the broader social world in which it is embedded. This includes the background conditions that make its functions possible, such as computer chips and cellular data towers. But it also includes relevant social conditions: people having a desire to communicate with each other or have instant access to distraction.

All this is to say that when we speak of technology, for the purposes of understanding it, we are really speaking of techno-social systems: the complex interactions between technological artifacts and aspects of the social world. Understanding this can open up new avenues for exploration and development: for it encourages us to pay attention to how actual people actually use things, rather than merely focusing on how we as technological designers may intend for things to be used.

3. Technological Mediation

We can deepen our thinking about techno-social systems and the social context of technology by exploring the Theory of Technological Mediation.[5] Technological mediation is a way of thinking about technology that aims to take technological artifacts seriously by putting our focus on what these artifacts do. This can sound like an odd approach, for most of us are used to purely instrumental ways of thinking about technology. On these instrumentalist approaches, technological artifacts do not do anything. Rather, they are simply passive tools (objects) used by humans (subjects) to achieve human ends. Put another way, the instrumental approach regards technological artifacts as dead matter upon and through which humans can exercise their will.

Mediation theory, however, orients us away from this instrumentalist view of technology as passive. Instead, it holds that technological artifacts play an active role in our lives by always mediating the way we engage with the world around us. And while this may seem uncontroversial when it comes to technological artifacts that seem to ‘act’, like robots or digital avatars, mediation theory suggests that this is true of all technological artifacts. Even simple artifacts like hammers, glasses, or the fountain pen mediate our lives.

Technological artifacts mediate two important aspects of our existence in the world: our perception of the world, and our actions in it. In so doing, technological artifacts change who and what we are.[6]

When technology mediates our perception of the world, it may amplify or reduce certain aspects of the world to be experienced. When technology mediates our actions in the world, its design or implementation is such that it is inviting, discouraging, or inhibiting certain actions. We can see this at play with a simple technological artifact: the hammer. The hammer invites certain actions like using it to drive nails (rather than attempting to press them with your bare hands) while discouraging others such as fastening two boards with glue.[7] The simple hammer also changes our perception of the world. There is, of course, the old adage that “when all you have is a hammer, everything looks like a nail”. But more precisely, we can notice that our focus is drawn to the head of the nail, searching out a proper contact point between hammer and nail as much of the rest of the world fades out of view. When holding a hammer, the world becomes more “hammerable” than it was before: certain features stand out as more or less inviting to my hammer use. In both subtle and less subtle ways, engaging with the world in a way mediated by the hammer changes that engagement in profound ways.

Ethics is traditionally concerned with what humans, or other moral agents, do. In the context of technology, this often involves asking how humans should or should not use technological artifacts. But if technology mediates our interaction with the world in the ways just discussed, then we should expand our ethical thinking beyond what humans do and ask: what do technological artifacts do? Asking this sort of question can open up two useful avenues for social and ethical reflection.

First, mediation theory can enhance our moral perception and imagination. It does this by helping us identify and describe morally salient aspects of human-technology relations that would otherwise remain hidden. Mediation theory encourages us to ask ethical questions about the way technology enhances and diminishes our perception of the world and the ways it invites and inhibits our actions in the world. Some technologies inhibit actions we want inhibited and direct our focus in helpful ways. But other technologies may encourage actions we would rather people avoid or discourage us from paying attention to morally important issues.

Second, mediation theory can enhance our moral reasoning abilities. Once we become aware of the ways that technology mediates our perception of and interaction with the world, we are in a position to take seriously our responsibilities to design, implement, and interact with technology with that mediation in mind. For example, once we realize that technology can be used to discourage or even outright forbid certain actions, we can ask under what conditions technology should discourage or forbid such actions. As a result, when we reason about how to design or implement technology, we are in a position to pay closer attention to its design features rather than largely off-loading our ethical thinking to the mere use of the technology.

In short, mediation theory suggests that it is the moral responsibility of technology designers to predict and anticipate the mediating effects of their designs and to design their technologies to mediate well.

Example of Technological Mediation

In Moralizing Technology: Understanding and Designing the Morality of Things, Peter-Paul Verbeek illustrates technological mediation by detailing how an obstetric ultrasound alters our moral perception and reasoning.

From a purely instrumental perspective (i.e., absent the benefits of mediation theory), we may simply describe an ultrasound as a tool for providing visual access to a fetus in utero. In this way it enhances our perception and can provide us with access to certain types of potentially relevant information, such as the fetus’s health.

But once we consider the ultrasound with mediation theory, asking what an ultrasound does as a piece of technology (rather than just what do humans do with it as a tool), we gain new insight.

First, consider perception: an ultrasound casts the fetus as a distinct individual unborn baby. It does this, first, by encouraging us to focus on the display screen rather than the mother, thereby separating the fetus from the mother in our minds. Additionally, the ultrasound screen enhances the size of the fetus such that it appears to be much closer to the size of a newborn infant. This all suggests how an ultrasound changes our perception of the world (and, in particular, the fetus and its relation to the mother). But it also changes our moral perception: the existence of the ultrasound and its ability to detect features of the fetus encourages the further medicalization of pregnancy and establishes the womb as a site of surveillance. Finally, by establishing the fetus as an independent unborn baby, the ultrasound contributes to a shift in how we think about the responsibilities of (prospective) parents. For even before the child is born it is now an independent being that must be regarded wholly independently from the mother.

Second, consider reasoning: being able to see the biological sex of the fetus as well as potential defects now opens up new avenues of moral thinking that are simply unavailable without the technology. Decisions about whether to continue with the pregnancy can now be made on the basis of the biological sex of the child or the presence of birth defects. Similarly, in contributing to the further medicalization of pregnancy, parents now become responsible for their choice of birthing methods in a way that is not possible if there simply are no choices.

This mediation analysis of the ultrasound suggests that designers should be asking a variety of questions that are likely currently ignored:

- Could the visual presentation of the fetus be altered to better reflect scale or the fetus’s interconnections with the mother?

- Could ultrasounds be designed in such a way that parents could use them at home, rather than necessitating involvement in the hospital system?

4. Technological Mediation & The Control Dilemma

Technological mediation theory tells us that technology influences people and society. Keeping that in mind can allow us to predict what those influences may be prior to the (wide-scale) release of the technology into society. This is certainly a key reason to be familiar with the theory. However, it would be too quick to believe that we always can, in fact, predict how technology will affect society (or how society will affect technology). A key explanation for this was first developed by philosopher David Collingridge and is now known as The Control Dilemma (or sometimes The Collingridge Dilemma):[8]

The Control Dilemma, like all dilemmas, establishes two paths (“di-”), both of which are problematic in some way. We want to be able to control the introduction of technology into society to limit negative effects, but we cannot really know the effects until we introduce the technology into society. But if we introduce the technology into society to figure out the effects, then we are not in as good a position to control the technology and its effects.

Thus, even as technological mediation theory and general awareness of the social context of engineering and technology empower us to better direct the development of technology and its introduction into society, the control dilemma suggests our power will always be somewhat limited.

The classic control dilemma is predominantly focused on “hard impacts” of technology: effects on health, safety, the environment, etc. But technological mediation theory suggests that technology can also have “soft impacts” – it can affect, over time, our social values and principles, thereby not just affecting what we already care about (as in the hard impacts) but also changing what we care about. As such, philosophers Olya Kudina & Peter-Paul Verbeek have recently argued that there is a second version of the control dilemma that emphasizes these “soft impacts”:

In recent times, this moral control dilemma is perhaps best illustrated by the changing understanding of the value of privacy in light of recent digital technologies with substantial surveillance possibilities. Before the widespread existence of cell phones with cameras, most live music venues banned photos and videos. Now with the increasing use of video doorbells we are forced to ask what counts as a reasonable expectation of privacy on your own property. The increasing availability of consumer-level drones similarly raises questions about what privacy requires and why privacy is important. The upshot, then, is that although we may have started the development of these technologies with one framework for understanding privacy, by the time the technologies are created and introduced into society, they effectively have forced us to change our framework.

Refer back to the ultrasound example of technological mediation and you can similarly see how technology can force (or at least strongly encourage) us to change our moral frameworks. But, again, to reassert the dilemma: although we can know, in general, that technology may have this effect, it is unlikely that we can wholly anticipate the change or wholly control it.

The Control Dilemma in Action: Rebound Effects & Induced Demand

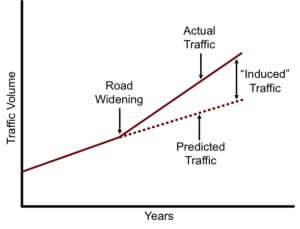

Compact Fluorescent and LED lightbulbs were introduced largely as a means of reducing energy usage related to lighting. But, in fact, they have had the opposite effect, increasing light-related energy usage.[9] Similarly, the typical response (in the United States at least) to traffic congestion is to increase the available lanes of traffic. The expected response would be a reduction in congestion as vehicles now have more space to spread out. But, in reality, the typical result is the opposite: an increase in congestion.[10]

These are examples of two related phenomena which show the importance of reflecting on implicit mediations and their related unintended outcomes. In the first case, we are dealing with the Rebound Effect: the fact that increases in the efficiency of a technology will often not result in (as much of) a reduction in resource usage because the increased efficiency leads people to see usage as “more acceptable”. The person who buys a more energy-efficient vehicle and tells themselves, as a result, “I can now take those long road trips!” is evidencing the rebound effect. Importantly, the rebound effect describes both a situation (like the lightbulbs) where the resulting effect actually makes things worse than they were before and a situation where the resulting effect simply reduces the hoped-for benefits. Thus, the rebound effect can either produce negative mediation outcomes or simply reduce the positive ones.
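The arithmetic behind the rebound effect can be made concrete with a small sketch. The function names and the example percentages below are illustrative assumptions, not figures from the lightbulb studies cited above; the sketch simply compares the savings an efficiency gain would produce if behavior stayed fixed with the savings left over once usage grows in response.

```python
def rebound(efficiency_gain, usage_increase):
    """Compare expected and actual resource savings (all values are fractions).

    efficiency_gain: how much less energy each unit of service needs (0.25 = 25% less).
    usage_increase: how much more of the service people use afterwards (0.20 = 20% more).
    Both parameters are hypothetical inputs chosen for illustration.
    """
    expected_savings = efficiency_gain  # savings if behavior did not change
    energy_after = (1 - efficiency_gain) * (1 + usage_increase)
    actual_savings = 1 - energy_after   # negative means more energy is used than before
    rebound_share = (expected_savings - actual_savings) / expected_savings
    return expected_savings, actual_savings, rebound_share


def describe(rebound_share):
    """Map the share of expected savings that was lost to the two situations in the text."""
    if rebound_share > 1:
        return "worse than before"   # like the lightbulb case
    if rebound_share > 0:
        return "reduced benefit"     # savings exist, but smaller than hoped
    return "full benefit"


if __name__ == "__main__":
    for gain, extra_use in [(0.25, 0.20), (0.50, 1.50)]:
        expected, actual, share = rebound(gain, extra_use)
        print(f"gain={gain:.0%} extra use={extra_use:.0%} "
              f"expected={expected:.0%} actual={actual:.0%} -> {describe(share)}")
```

Under these assumed numbers, a 25% efficiency gain paired with 20% more usage still saves energy, only less than hoped, while a 50% gain paired with a 150% increase in usage ends up consuming more than before.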

An increase in vehicle congestion resulting from more traffic lanes is a type of rebound effect known as Induced Demand. The basic idea, in the context of traffic engineering, is that traffic congestion almost always maintains a particular equilibrium: traffic volume increases until congestion delays discourage additional peak-period trips. Adding lanes, therefore, simply provides an opportunity for increased traffic volume until that same equilibrium is reached. Thus, although the goal of expanding the roadway was a reduction in congestion, the result is no such reduction, and potentially an increase. Although induced demand is most commonly discussed in the context of traffic engineering, the phenomenon can apply to any sector of the economy. In other contexts it often just describes a situation where demand for some good increases as the cost of the good decreases.
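The equilibrium described above can be sketched as a toy model. Everything in it is an assumption made for illustration: the quadratic delay curve, the capacity figures, and the idea that drivers share a single tolerated delay are not taken from the traffic engineering literature. The point is only to show why added capacity fills up when unmet (latent) demand exists.

```python
def delay_minutes(volume, capacity):
    """Hypothetical delay curve: delay grows with the square of volume/capacity."""
    return 10.0 * (volume / capacity) ** 2


def equilibrium_volume(capacity, tolerated_delay, latent_demand):
    """Add peak-period trips one at a time until the next trip would push the
    delay past what drivers tolerate, or until no one else wants to travel."""
    volume = 0
    while (volume < latent_demand
           and delay_minutes(volume + 1, capacity) <= tolerated_delay):
        volume += 1
    return volume


if __name__ == "__main__":
    # Assumed numbers: 2000 vs 3000 vehicles/hour of capacity, 10-minute tolerance.
    for capacity in (2000, 3000):
        volume = equilibrium_volume(capacity, tolerated_delay=10.0, latent_demand=5000)
        print(capacity, volume, delay_minutes(volume, capacity))
```

In this sketch the wider road carries more vehicles but settles at the same delay as before. If latent demand is set below the road's capacity, the model instead shows delays falling, which is a reminder that the outcome depends on the actual social context rather than on the lanes alone.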

Both the rebound effect and induced demand provide striking examples of unintended technological mediations. Additionally, because understanding both requires understanding social dynamics, economics, and human psychology, they show the importance of broad understanding of the humanities and social sciences to effective technological design.

5. Assessing and Designing Technological Mediations

Although the Control Dilemma should make us humble about ever fully knowing the mediating effects of a technology, that does not mean we cannot become better at anticipating mediating effects and at designing for desired ones. To do that, however, we must develop and deploy our moral imaginations. We do this, partly, through developing a broad understanding of people and society via humanistic and social scientific inquiry. But we can also do this through knowing what questions to ask. Since the broad understanding of people and society goes well beyond the scope of this book, we will focus instead on developing a quasi-systematic “framework” for asking the right questions.

To craft this framework, we can divide mediation analysis into four elements:

- Intended Mediations: The influences on perception and/or behavior that the designers explicitly intend and hope to design into the artifact

- Implicit Mediations: The influences on perception and/or behavior that the artifact may unintentionally have due to its design and/or its use context

- Forms of Mediation: The specific methods used to mediate perception and/or action

- Outcomes of Mediation: The actions, decisions, and broader social changes that result from the mediations

These four elements are, of course, related. To see how, consider the case of the speed bump. In designing and installing a speed bump, we intend to influence behavior, specifically by making vehicles slow down (an intended mediation). To do this, we use a technology that effectively forces the driver to slow down (or risk damaging their vehicle). This is the form of mediation (more on forms below). So we hope to force people to slow down, presumably with the broader outcome being that people slow down in important areas and therefore safety is enhanced. That is at least one description of the outcome of mediation. But, of course, whether it is indeed the outcome depends on the actual social context. Just because we intend to mediate behavior in a certain way to produce a certain outcome does not mean we will succeed. Or, even if we do succeed, it does not mean we will not also produce other outcomes as a result of our intended or implicit mediations.

So, to more fully develop our analysis, we would also want to think about potential implicit mediations as well as, to whatever degree possible, actually study the artifact (or similar artifacts) in the actual world to know actual outcomes. When it comes to implicit mediations, though, we can try to anticipate them by thinking slowly and deeply about how interaction with the artifact may influence perception or behavior. For instance, if we assume some people will not like to have to slow down due to the speed bump, then we can anticipate that a potential implicit mediating effect of our speed bump will be to divert traffic to another street (if an alternative exists). In this way, a speed bump encourages (some) drivers to alter their commute. The ultimate outcome of that, beyond merely increasing traffic on some other roadway, will be a matter of a variety of contextual factors and so would require some specific research. But, by engaging our moral imagination we were able to set ourselves up for that additional research. Perhaps the street that will see increasing traffic is well under capacity and so we are fine with creating an increase. But perhaps it is an area with a school and so the increased traffic would heighten risks for pedestrians. Determining this sort of thing is where the technical competencies of the relevant engineers or computer scientists become important.

We can reconstitute the above analysis into our four elements in the following way:

- Intended Mediation: Decrease automobile speed on the street

- Implicit Mediation: Shift more traffic to an alternative street

- Forms of Mediation: Intended mediation is coercive while the implicit mediation is persuasive

- Outcomes of Mediation: Although we cannot know the precise outcomes until later, the predicted outcomes are an increase in safety for all modes of transport on the street with the speed bump and an increase in traffic (requiring further study) on an alternative route

This is, of course, only a partial analysis. We may be intending other mediations with our speed bump and there will almost certainly be other implicit mediations and therefore other outcomes. But, this partial analysis should provide a useful example of how to engage in a Mediation Analysis. To further enhance our abilities, however, we can examine each of the four elements in a bit more detail to identify common questions we may want to ask and common results we may want to be on the lookout for.
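For readers who find it helpful to see the framework as a record-keeping structure, the partial speed bump analysis can be written down as a small data structure. This is only one possible sketch: the class and field names (`Form`, `Mediation`, `MediationAnalysis`) are hypothetical and are not part of mediation theory itself.

```python
from dataclasses import dataclass, field
from enum import Enum


class Form(Enum):
    """The three broad forms of mediation named in this chapter."""
    GUIDANCE = "guidance"
    PERSUASION = "persuasion"
    COERCION = "coercion"


@dataclass
class Mediation:
    description: str
    form: Form
    intended: bool  # False marks an implicit (unintended) mediation


@dataclass
class MediationAnalysis:
    artifact: str
    mediations: list[Mediation] = field(default_factory=list)
    predicted_outcomes: list[str] = field(default_factory=list)

    def intended(self):
        return [m for m in self.mediations if m.intended]

    def implicit(self):
        return [m for m in self.mediations if not m.intended]


# The speed bump example, recorded as data (a partial analysis, as in the text).
speed_bump = MediationAnalysis(
    artifact="speed bump",
    mediations=[
        Mediation("Decrease automobile speed on the street", Form.COERCION, intended=True),
        Mediation("Shift more traffic to an alternative street", Form.PERSUASION, intended=False),
    ],
    predicted_outcomes=[
        "Increased safety for all modes of transport on the street with the speed bump",
        "Increased traffic on an alternative route (requires further study)",
    ],
)

if __name__ == "__main__":
    for m in speed_bump.mediations:
        kind = "intended" if m.intended else "implicit"
        print(f"{kind}: {m.description} ({m.form.value})")
```

Keeping intended and implicit mediations in the same list, distinguished only by a flag, reflects the point that the same questions are asked of both; the outcomes stay labeled as predictions because they can only be confirmed by studying the artifact in use.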

5.1. Intended & Implicit Mediations

Whether a mediation is intended or not, we will tend to ask many of the same questions in order to identify and understand it. Most broadly, we are aiming to answer the following sorts of questions:

- How will this technology alter the perceptions of those who use or interact with it? Where does it direct their attention during use or interaction? What does it direct them away from paying attention to during use or interaction?

- How will this technology alter the actions of those who use or interact with it? What sorts of actions does it make more likely? What sorts of actions does it make less likely?

- How will this technology alter the way individuals, groups of people, or society at large interpret and perceive their world? How might it change their understanding of themselves or their world through the changes in perception and/or action it generates in users?

It is important to notice that the first two sets of questions direct us to focus on those interacting with the technology. Mediation always begins with those in contact with the technology, even as it will typically end up having mediating effects even for those who never interact with it. The third set of questions is placed third for a reason, then, as it encourages us to reflect on how interactors will be mediated in order to think about broader changes that we may produce. It is important to focus first and foremost, and most heavily, on the mediating effects for interactors, since those tend to be the result of specific design decisions and are thus most directly open to change through re-design.

5.2. Forms of Mediation

In the speed bump example above we saw two different forms of mediation: coercion and persuasion. Broadly speaking, there are many different forms of mediation and so we cannot exhaustively list them all. Instead, we can identify three broad forms mediation may take. All forms of mediation function as “scripts” that make certain perceptions or actions more or less likely. In this way, they all attempt to generate certain types of perceptions or actions. However, it is worth noting that some forms are more “heavy handed” than others. Thus, in laying out the three general forms below, we will work from the “least heavy handed” to the “most heavy handed”. Thinking in this way is helpful since one major concern raised by the fact of technological mediation is that it reduces human freedom. This is certainly true, in much the same way that laws or social norms also reduce human freedom, but the more “heavy handed” forms of mediation are heavy handed precisely in the fact that they reduce freedom more (or more strongly reduce freedom).[11]

5.2.1. Guidance

Much of our technologically mediated world involves forms of guidance: we use images or other tools to indicate to people how to interact with a product or how to use the technological artifact to achieve their ends. Consider all the signage in a building: bright exit signs, labels on doors that say “push” or “pull”. Many signs include images, text, and braille to ensure people are guided in multiple ways.

When we use Guidance Design, our goal is simply to facilitate people in doing what they want to do. We are not encouraging them to do anything in particular, but rather making it easier for them to do what they already want to do. In some cases we are guiding interaction with the technological artifact itself: labeling a button ‘power’ or using a symbol that designates power simply guides the user who may want to power on the device. In other cases we are guiding interaction with the larger social world: textured walking strips at curbs help those who are sight-impaired navigate the world.

The most interesting forms of guidance mediation come when an artifact is designed in such a way as to guide usage without explicit signage. Consider a door that, in lieu of a “push” or “pull” label, is designed so that most people immediately know whether it needs to be pushed or pulled to open. Car door handles, as illustrated above, function in this way: there is no sign telling you how to interact with the handle; instead, the handle is structured in such a way that you are guided to use it for its proper function.

As the least “heavy handed” form of mediation, guidance is generally considered the most open to failure. Each of you has probably had the experience of attempting to open a door in the way you thought appropriate only to find out it opens the other way. However, if “failure” is not a big deal (for instance, you just attempt to open the door the other way) then we may prefer guidance over more heavy handed approaches.

5.2.2. Persuasive Design

Sometimes our goal is not simply to help people do what they already want to do, but to actually encourage them to do something they otherwise would not do (or, at least, would be less likely to do). For instance, in the Netherlands some of the live speed checking signs on roads output a “sad face” when the driver is exceeding the speed limit. The goal, of course, is to encourage the driver to slow down.

Persuasive design engages people’s minds just like guidance design, but does it with more of a ‘push’. Whereas in guidance we assume the person already wants to do the thing we are guiding them to do, with persuasion our default assumption is that the person would not otherwise do the thing we are now persuading them to do. Of course, that doesn’t mean everyone who interacts with the technology actually needs persuasion, but in general we assume people will need some sort of ‘push’ to engage in and continue the behavior. Nonetheless, it is important to note that persuasive design does not force any sort of behavior; it simply aims to make it more likely.

Persuasive design is sometimes split into two sub-categories: Persuasive design and Seductive design. The relevant distinction here is whether the design attempts to engage the user as a rational person, encouraging them to choose the desired action, or whether it engages them non-cognitively as a means of “seducing” them into the desired action without any real reflective choice. We can see the difference when thinking about two different approaches to reducing automobile speed. The “sad face” speed checker, illustrated above, is an example of persuasive design. For it to be effective at all, the driver must pay attention to it and then process the information it is providing. An alternative approach would be to design the street in such a way that it simply makes driving at the desired speed the most attractive option. For instance, we may add curves to the road, narrow it, or add a tree lawn. Each of these has known behavioral effects on most people: it makes driving slower the more attractive option even though no driver is asked to “decide” to drive slower. As a side-note, to complete the example, if we simply install a sign indicating the desired speed limit, we would be using guidance, largely relying on people to already want to drive the “safe speed” (of course, we are assuming that posted speed limits represent the “safe speed”, which is dubious).

For our purposes, we will just include seductive design under the heading of persuasive design. Nonetheless, it is worth keeping in mind the difference. Some would suggest that seductive design is “more heavy handed” than persuasive design, since it doesn’t attempt to engage our reasoning abilities. However, both forms of design are about encouraging certain perceptions or actions, even if their methods are a bit different.

Persuasive design (including seductive design) can be used for good or ill. Some companies have embraced persuasive design to “persuade” users to use devices more: smartphone “addiction” is a real thing. Often these uses of persuasive design aimed at making people engage with the technology more are dubbed “addictive design” to emphasize the nefarious aim of encouraging people to become ‘addicted’ to the technology.

But persuasive design need not focus on persuading a person to simply interact with the technology more. Instead, as the earlier examples indicate, persuasive design can be aimed at helping people develop good habits like regularly engaging in physical activity or turning off electronics at a certain point in the night.

5.2.3. Coercive Design

In some cases we need to effectively guarantee people will engage in certain behaviors. Although persuasive design makes the desired behavior more likely than guidance does, it still leaves open the possibility that people do not do what we want them to do. In these cases, we may turn to coercive design.

If it is essential that drivers slow down, a “sad face” speed checker is not going to do the job. Instead, we may install speed bumps, as they force a driver to slow down (unless they have no care for their vehicle). Or, if we are designing dangerous machinery and want to ensure it cannot be operated without someone actively present, we might require someone to always have their foot on a pedal and hand on a button for the machinery to work. We could use persuasive design to achieve the same goal, but it is likely a few people would lose fingers and toes in the process. Coercive design, on the other hand, effectively eliminates any thinking or choice by the person interacting with the technology.

To put things more broadly, coercive design involves design features aimed at requiring or eliminating certain behaviors or perceptions. The examples above were all about requiring certain behaviors, but we can also see coercion at play in eliminating certain behaviors (at least among certain people): some of the overpass bridges constructed on Long Island in New York were intentionally designed to be too low for city busses to pass through. This was done by the designer as a means of keeping low-income people away from Long Island beaches. Similar things occur today in the use of Hostile Architecture, such as park benches with a bar in the middle that prevents anyone (typically the homeless) from sleeping on the bench.

We should be clear that coercive design need not strictly guarantee the desired action. Instead, it may effectively guarantee it by providing some sort of negative feedback. This is, of course, how speed bumps work. Strictly speaking, someone could go over them without slowing down, but we still consider the design coercive since the result of not slowing down is likely to be damage to the vehicle. This is in contrast to persuasive design, which may provide some sort of positive feedback for compliance but does not involve any sort of damaging or harmful negative feedback for non-compliance.

5.3. Outcomes of Mediation

Now that we know some of the core questions to ask to determine what sorts of mediations may occur, and we have a taxonomy of different types of mediations, the final step of our analysis is to identify the predicted and actual outcomes of the intended and implicit mediations. To do that, we can ask the following sorts of questions:

- If the mediations work as predicted, what are the short- and long-term effects on both those who interact with the artifact and society more broadly?

- Given other things we may know about the use context, are there any undesirable outcomes we can predict as a result of our mediations?

- What values are we promoting or protecting through our mediations? What values are we diminishing or frustrating?

- Is our form of mediation proportional to our expected or actual outcome? Or should we use a less “heavy handed” form of mediation?

To put these questions in context, consider a previous example of coercive design: the requirement that a person simultaneously have their foot on a pedal and hand on a button for a dangerous piece of heavy machinery to function. There are likely to be some implicit mediations from this, but we’ll focus on the intended one: requiring someone to be present and actively involved in the functioning of the machinery. If that works as predicted, we can expect the short- and long-term effects to be increased workplace safety, thus promoting the value of (human) health. We may also predict some undesirable outcomes in the form of “cheating” the system, perhaps by putting heavy objects on the pedal and button so the machinery can function unattended. In this case, that would cut against our improvements in human health, but is unlikely to eliminate those benefits. We are, however, frustrating a value like individual freedom by forcing a person to interact with the machinery in a particular way. Given all this, is coercive design justified? Or should we perhaps re-design the machinery using guidance or persuasion as the means of promoting safety? The answer to that question may be complex, but to begin answering it we would want to think about the trade-offs between the value(s) promoted and the value(s) frustrated, as well as the same trade-offs under alternative designs. If guidance or persuasive design would significantly reduce the benefits to human health (that is, if they would increase workplace injuries), then coercion may be justified. But this is partly because the value being promoted is human health, a value that is very important and (basically) universal. If we were instead promoting some other value of less importance, then the cost to individual freedom may not be worth it.

At this point we are moving into the realm of Mediation Evaluation, but we do not yet have (all the) tools necessary to evaluate mediations fully. Those will come later, but for now we can emphasize that by thinking in terms of the four elements of mediation and asking the appropriate questions or applying the appropriate taxonomy we can produce a reasonably comprehensive Mediation Analysis. This analysis does not, on its own, fully work out what we ought to do, but it is essential to doing that. And by splitting the analysis off from the evaluation, we are more likely to produce a robust analysis and therefore (later) a better evaluation.

Check Your Understanding

After successfully completing this chapter, you should be able to answer all the following questions:

- What does it mean for engineering and technology to function in a social context? What are some examples of engineering/technology functioning in a social context?

- What are disruptive technologies and how do they illustrate the idea that engineering and technology function in a social context?

- What is a technological artifact? How do they relate to techno-social systems?

- What does Technological Mediation Theory tell us about technological artifacts?

- What is The Moral Control Dilemma? How does it relate to the standard Control Dilemma?

- What is Induced Demand? How does it relate to the Rebound Effect? And how do both relate to Technological Mediation?

- What are the four elements of a Mediation Analysis? What sorts of questions might we ask for each element?

- What are the three main forms of mediation? Construct your own example of each.

References & Further Reading

Collingridge, D. (1980). The Social Control of Technology. Continuum International Publishing.

de Boer, B., Hoek, J., & Kudina, O. (2018). “Can the technological mediation approach improve technology assessment? A critical view from ‘within’,” Journal of Responsible Innovation 5(3): 299-315.

Dorrestijn, S. (2017). “The Care of our Hybrid Selves: Ethics in Times of Technical Mediation,” Foundations of Science 22(2): 311-321.

Hauser, S., Oogjes, D., Wakkary, R., & Verbeek, P.P. (2018). “An annotated portfolio on doing postphenomenology through research products,” DIS 2018 – Proceedings of the 2018 Designing Interactive Systems Conference, 459-472.

Kudina, O. & Verbeek, P.-P. (2018). “Ethics from Within: Google Glass, the Collingridge Dilemma, and the Mediated Value of Privacy,” Science, Technology, & Human Values 44(2): 291-314.

Verbeek, P.-P. (2005). What Things Do: Philosophical Reflections on Technology, Agency and Design. The Pennsylvania State University Press.

Verbeek, P.-P. (2011). Moralizing Technology: Understanding and Designing the Morality of Things. The University of Chicago Press.

Winner, L. (1980). “Do Artifacts have Politics?” Daedalus 109(1): 121-136.

[1] The discussion that follows was originally presented by Mike Martin and Roland Schinzinger in Ethics in Engineering (1989)

[2] Lord, 1976; Wade, 1980; Davie, 1986.

[3] Trevor J. Pinch & Wiebe E. Bijker (1984). “The social construction of facts and artefacts: or How the sociology of science and the sociology of technology might benefit each other,” Social Studies of Science 14.3: 399-441.

[4] Daniel Miller (2010), Stuff. Polity Press.

[5] This section is inspired by, and portions of it taken from, Dr. Jan Peter Bergen's "Technological Mediation and Ethics", published by the 4TU Centre for Ethics and Technology under a CC-A-SA license.

[6] In mediation theory, this is an aspect of the "co-constitution of humans and artifacts". Without human subjects, there would be no artifacts. However, without technological artifacts, human subjects as we know them would likewise not exist. There is an ongoing co-shaping of humans and technology.

[7] The hammer may invite certain other actions as well, such as the use of it as a paperweight. This connects to the idea of multistability, which indicates that specific artifacts invite a specific, varied, but limited set of actions, some of which the artifact was not specifically designed for.

[8] David Collingridge (1980). The Social Control of Technology. London: Frances Pinter.

[9] Joachim Schleich, et al. (2014). "A Brighter Future? Quantifying the rebound effect in energy efficient lighting," Energy Policy 72: 35-42.

[10] Todd Litman (2022). "Generated Traffic and Induced Travel: Implications for Transport Planning," Institute of Transportation Engineers Journal 71(4): 38-47.

[11] The tool and techniques described here are derived from the De-scription activity created by Jet Gipson and the Product Impact Tool created by Steven Dorrestijn & Wouter Eggink. Further inspiration is taken from Peter-Paul Verbeek's Moralizing Technology: Understanding and Designing the Morality of Things.