Chapter 14. Generative Artificial Intelligence (GenAI) and Project Management

14.1 Generative AI (GenAI)

14.1.1 Generative AI (GenAI)

GenAI refers to a subset of artificial intelligence (AI) technologies that generate new content (text, images, music, code, and other forms of data) by learning from existing patterns and examples. Unlike traditional AI models (e.g., classification, regression, and clustering models) that primarily classify, predict, or analyze data, GenAI models can create novel outputs that resemble the data they were trained on[6].

GenAI is powered by advanced machine learning techniques, particularly neural networks like transformers, which enable these systems to process large datasets and produce original content based on patterns learned during training[7].

Major types of GenAI models are:

- Large Language Models (LLMs)

- LLMs are designed to understand and generate human-like text based on large datasets. They can perform a wide range of language tasks, such as text completion, summarization, translation, and answering questions. Most modern LLMs are built on transformer architectures and typically generate text autoregressively, one token at a time.

- Examples: ChatGPT by OpenAI[8], Gemini by Google[9], Claude by Anthropic[10], LLaMA by Meta[11], and ERNIE by Baidu[12].

- Transformer-based Models

- Transformer models are neural network architectures that excel at processing sequential data. While commonly used in LLMs, transformer models can also be adapted for other generative tasks, such as image generation, speech synthesis, and music composition.

- Examples: The first version of DALL·E for image generation, ElevenLabs for speech generation[13], SOUNDRAW for music generation[14], and GitHub Copilot for code generation.

- Autoregressive Models

- Autoregressive models generate outputs step by step, with each step conditioned on the preceding outputs. In text generation, for example, the model predicts the next word in a sequence based on the words that have already been generated. Many LLMs like GPT combine transformer architecture with an autoregressive generation process.

- Examples: GPT for text generation and WaveNet for audio generation.

- Diffusion Models

- Diffusion models are generative models that start from random noise and gradually denoise it to produce high-quality images. Due to their ability to create highly detailed and realistic images, diffusion models have become popular for image-generation tasks.

- Examples: DALL·E 2 and 3 (incorporated into ChatGPT)[15], Stable Diffusion[16].

- Generative Adversarial Networks (GANs)

- GANs consist of two neural networks, a generator and a discriminator, that are trained against each other to produce realistic data such as images, videos, and audio. The generator creates data, while the discriminator tries to tell generated data apart from real data.

- Examples: StyleGAN for realistic image generation and deepfake models for video manipulation.

- Variational Autoencoders (VAEs)

- VAEs generate new data similar to a training set by encoding input data into a compressed (latent) representation and then decoding samples drawn from that representation into new data.

- Examples: VAEs are applied to medical image generation and video game design.
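The step-by-step generation described under Autoregressive Models can be sketched in a few lines of Python. This is a toy illustration only: the `NEXT_WORD_PROBS` table and the `generate` function are hypothetical names introduced here, and the probabilities are made up by hand rather than learned. The sketch also conditions each word only on the single previous word, whereas an LLM conditions on the whole preceding sequence.

```python
import random

# Hypothetical, hand-written next-word probabilities.
# A real model would learn these from training data.
NEXT_WORD_PROBS = {
    "<start>": {"the": 1.0},
    "the": {"project": 0.6, "team": 0.4},
    "project": {"plan": 0.7, "team": 0.3},
    "team": {"meets": 1.0},
    "plan": {"<end>": 1.0},
    "meets": {"<end>": 1.0},
}


def generate(seed=0, max_words=10):
    """Generate a sentence one word at a time.

    Each new word is sampled using the word generated just before it,
    which is the core idea of autoregressive generation.
    """
    rng = random.Random(seed)  # seeded so a given seed repeats its output
    words = []
    current = "<start>"
    for _ in range(max_words):
        options = NEXT_WORD_PROBS[current]
        # Sample the next word in proportion to its probability.
        current = rng.choices(list(options), weights=list(options.values()))[0]
        if current == "<end>":
            break
        words.append(current)
    return " ".join(words)


print(generate(seed=1))
```

Running `generate` with different seeds yields different short sentences from the same table, which mirrors why a GenAI tool can return different answers to the same prompt.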

14.1.2 Relationship of GenAI with AI in General

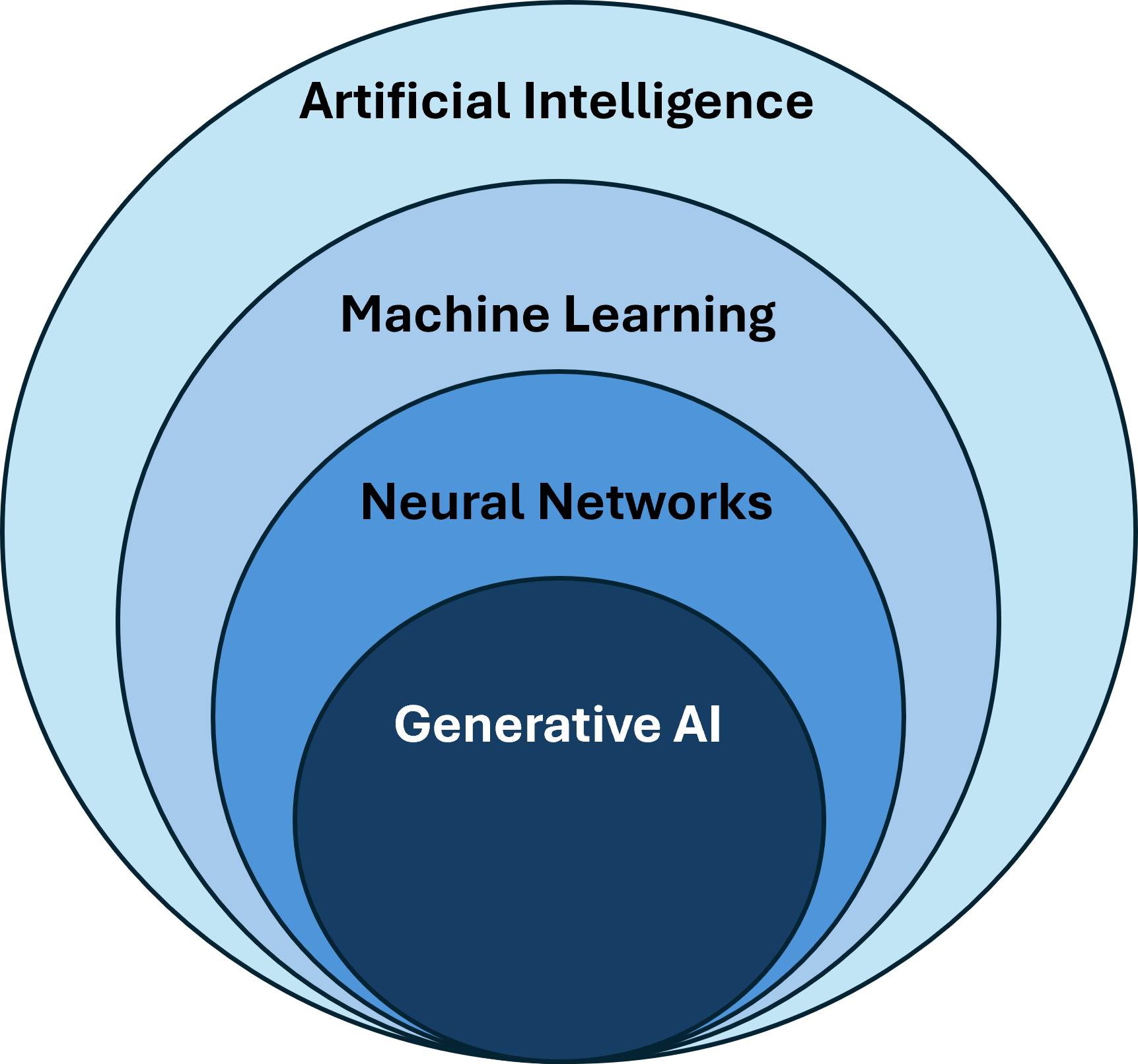

GenAI is a specialized branch of AI, existing as one of many applications within the broader field of artificial intelligence (Figure 14.1.1). Here’s how it fits into the AI ecosystem:

- Broader AI Scope:

- Narrow AI (also known as weak AI) encompasses a wide range of capabilities, including:

- Reactive machines that respond to specific stimuli (e.g., recommendation systems used in online platforms like Netflix and Amazon).

- Expert systems that can make decisions or predictions based on predefined rules (e.g., AI used in medical diagnostic tools).

- Learning systems that improve performance based on experience, including methods like reinforcement learning, supervised learning, and unsupervised learning[17].

- General AI vs. Narrow AI:

- General AI, or Artificial General Intelligence (AGI), is an advanced form of AI that can understand, learn, and perform any intellectual task a human can do. Unlike narrow AI, which is designed to excel at specific tasks like language translation or image recognition, general AI is adaptable and capable of transferring knowledge across different domains without requiring task-specific training. While narrow AI is widely used today, general AI remains a theoretical concept, aiming to replicate human-like reasoning, creativity, and problem-solving on a broad scale.

- GenAI represents a distinct and advanced application within narrow AI. While traditional narrow AI models focus on tasks like classifying or predicting data, GenAI creates new content (text, images, videos, and music) by identifying and mimicking patterns learned during training.

- Generative AI vs. Traditional AI:

- Traditional AI models are typically designed for classification (e.g., detecting spam), regression (e.g., predicting sales), and optimization (e.g., routing logistics). They often rely on identifying patterns within structured datasets to assist with decision-making or predictions[18].

- GenAI, in contrast, focuses on generating new data that adheres to the learned patterns, which may include producing text, video, audio, code, or even entire 3D models[19]. It is creative in nature, aiming to extend beyond analyzing existing data to creating entirely new, realistic outputs.

- Core Techniques:

- Machine Learning: Both traditional AI and GenAI rely on machine learning, but generative models are usually built on deep neural network architectures. In particular, transformer models have revolutionized GenAI by enabling large-scale modeling of language and images[20].

- Training and Learning: GenAI models are trained on vast datasets, such as text corpora for language generation or image databases for image creation, and are designed to replicate the patterns and features observed in the training data[21]. This allows them to generate novel content consistent with the training examples.

- Self-Supervised Learning: GenAI models often utilize self-supervised learning, which allows models to learn useful representations of data by predicting parts of the data itself. This method is crucial for tasks like text generation, where models predict the next word in a sequence.
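The self-supervised idea above, where the training labels come from the data itself, can be illustrated with a minimal sketch. The function names (`make_training_pairs`, `fit_next_word_counts`, `predict_next`) and the sample sentence are assumptions made for this example, and simple word counting stands in for the neural network a real GenAI model would use.

```python
from collections import Counter, defaultdict


def make_training_pairs(text):
    """Turn raw text into (previous word, next word) pairs.

    No human labeling is needed: the "label" for each word
    is simply the word that follows it in the text.
    """
    words = text.lower().split()
    return list(zip(words, words[1:]))


def fit_next_word_counts(pairs):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in pairs:
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]


corpus = "the team reviews the plan and the team updates the schedule"
pairs = make_training_pairs(corpus)
model = fit_next_word_counts(pairs)

print(pairs[:3])
print(predict_next(model, "the"))
```

In this sample, "team" follows "the" more often than any other word, so it becomes the prediction for "the". Large models apply the same principle at a much larger scale and with far richer context.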

The relationships between the different AI levels and types are illustrated in Figure 14.1.1, which shows the following nested layers:

- Artificial Intelligence (AI) (outermost layer):

- This represents the broadest concept. AI encompasses any machine or system capable of performing tasks that typically require human intelligence, such as problem-solving, reasoning, and learning. AI includes various approaches and methods, such as machine learning, rule-based systems, and expert systems.

- Machine Learning (ML) (next layer):

- A subset of AI, machine learning refers to systems that improve their performance on a task through experience, meaning they can learn from data. These systems use algorithms to identify patterns in data and make predictions or decisions without being explicitly programmed for specific tasks.

- Neural Networks (next layer):

- Within machine learning, neural networks are a class of models inspired by the structure and functioning of the human brain. They consist of interconnected nodes (neurons) organized in layers that process input data and learn to perform complex tasks, such as image recognition or language translation.

- Generative AI (innermost layer):

- GenAI is a specific application of neural networks and machine learning.

- Retrieved from https://openai.com/index/chatgpt/

- Retrieved from https://www.statista.com/chart/29174/time-to-one-million-users/

- Retrieved from https://www.theverge.com/2024/8/29/24231685/openai-chatgpt-200-million-weekly-users

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, Ł., & Polosukhin, I. (2017). Attention is all you need. In 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA. https://arxiv.org/abs/1706.03762

- Retrieved from https://www.weforum.org/agenda/2023/08/ai-artificial-intelligence-changing-the-future-of-work-jobs/

- Bommasani, R., Hudson, D. A., Adeli, E., Altman, R., Arora, S., von Arx, S., ... & Liang, P. (2021). On the opportunities and risks of foundation models. arXiv preprint arXiv:2108.07258.

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, Ł., & Polosukhin, I. (2017). Attention is all you need. In 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA. https://arxiv.org/abs/1706.03762

- https://chatgpt.com/

- https://gemini.google.com/app

- https://claude.ai/

- https://www.llama.com/

- https://yiyan.baidu.com/

- https://elevenlabs.io/

- https://soundraw.io/

- https://openai.com/index/dall-e-3/

- https://stability.ai/

- Russell, S. J., & Norvig, P. (2021). Artificial intelligence: A modern approach (4th ed.). Pearson.

- Russell, S. J., & Norvig, P. (2021). Artificial intelligence: A modern approach (4th ed.). Pearson.

- Bommasani, R., Hudson, D. A., Adeli, E., Altman, R., Arora, S., von Arx, S., ... & Liang, P. (2021). On the opportunities and risks of foundation models. arXiv preprint arXiv:2108.07258.

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, Ł., & Polosukhin, I. (2017). Attention is all you need. In 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA. https://arxiv.org/abs/1706.03762

- Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J. D., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D., Wu, J., Winter, C., ... Amodei, D. (2020). Language models are few-shot learners. In 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Vancouver, Canada. https://arxiv.org/abs/2005.14165